The Philosophy of Human-Robot Interactions

It takes more than technology to support human-robot interaction. Designers are turning to philosophy to understand how people work together, and applying that understanding to how people and machines collaborate.

The field of human-robot interaction is vast and complex, addressing perception, decision-making, and action.

Findings from cognitive psychology and philosophy are converging with what human-robot interaction research proposes for designing the control of interactive robots.

How Humans and Robots Collaborate

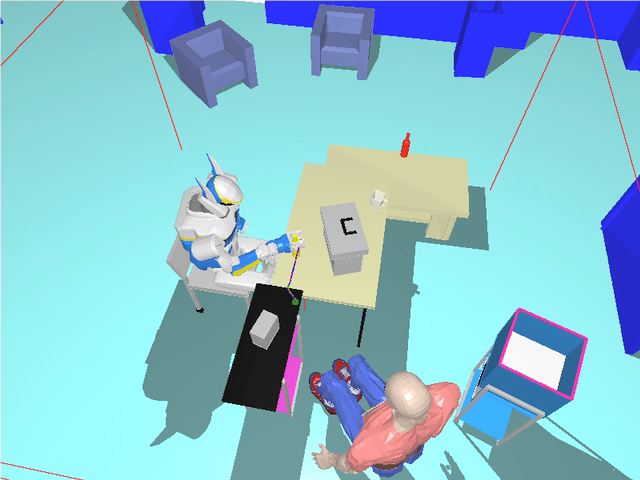

Consider, for example, a human and a robot working together to tidy up a tabletop (shown above). The human needs to get the bottle, which is out of reach for him, to put it in a bin.

• What knowledge does the robot need about the human to help him in an effective way?

• What processes does the robot need to handle to manage successful interaction?

• How are these processes organized?

• Conversely, what information should humans possess to understand what a robot is doing, and how should a robot make this information available to its human partner?

How Philosophy Applies to Robotics

Philosophers have explained in some detail how people cooperate and interact when working together. Human intentions, as they relate to carrying out joint actions, can be distinguished at three main levels:

1. A distal intentions level (D-intentions) in charge of the dynamics of decision-making through rational guidance and monitoring of actions;

2. A proximal intentions level (P-intentions) that inherits a plan from the previous level, and whose role is temporal and situational anchoring; and

3. A motor intentions level (M-intentions) that encodes the fine-grained details of the action, is responsible for the precision and smoothness of its execution, and operates at a finer time scale than either D-intentions or P-intentions.

This framework defining human intentions has interesting parallels to human-robot interaction. In fact, there is a clear convergence between a philosophical account of human-human action and human-robot action.

The robotics community has addressed the problem of robot control in order to build consistent and efficient robot systems that integrate perception, decision, and action capacities, and provide for both deliberation and reactivity.

Directly corresponding to the above levels of intention, a three-layered robotics architecture defines the following:

1. A decision level that can produce a plan to accomplish a task and supervise its execution, while remaining reactive to events from level 2 below. The coexistence of these two features raises a key challenge: integrating them so that deliberation and reaction are balanced at the decision level. This level feeds the next level with the sequence of actions to be executed.

2. An execution control level that controls and coordinates the execution of functions distributed across the operational modules that make up the next level, according to the task requirements.

3. A functional or operational level that includes operational modules embedding robot action and perception capacities for data and image processing and motion control loops.
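To make the layering concrete, here is a minimal sketch of the three levels in Python, using the tabletop bottle scenario. It is an illustration under stated assumptions, not the architecture of any particular robot: the class names, the `(action, target)` encoding, the hand-written alternative plans, and the simulated failure are all hypothetical. It shows the decision level handing a sequence of actions down, the execution control level dispatching them to the functional level, and a failure event travelling back up to trigger a reaction.

```python
from typing import List, Optional, Set, Tuple

Action = Tuple[str, str]  # (action name, target object), a hypothetical encoding


class FunctionalLevel:
    """Level 3: operational modules for perception and motion (stubbed)."""

    def __init__(self, failing: Optional[Set[str]] = None) -> None:
        # Action names that fail in this simulation, e.g. a grasp that cannot succeed.
        self.failing = failing or set()
        self.log: List[str] = []

    def run(self, action: Action) -> bool:
        name, target = action
        if name in self.failing:
            return False
        self.log.append(f"{name}:{target}")
        return True


class ExecutionControl:
    """Level 2: coordinates the functional modules for each planned action."""

    def __init__(self, functional: FunctionalLevel) -> None:
        self.functional = functional

    def execute(self, plan: List[Action]) -> Optional[Action]:
        # Returns the action that failed (an event for level 1), or None on success.
        for action in plan:
            if not self.functional.run(action):
                return action
        return None


class DecisionLevel:
    """Level 1: produces plans, supervises execution, reacts to failure events."""

    def __init__(self, control: ExecutionControl) -> None:
        self.control = control

    def candidate_plans(self, target: str) -> List[List[Action]]:
        # Hand-written alternatives (assumed); a real planner would compute these.
        return [
            [("pick", target), ("handover", target)],
            [("push", target)],
        ]

    def achieve(self, target: str) -> str:
        for plan in self.candidate_plans(target):
            failed = self.control.execute(plan)
            if failed is None:
                return "done"
            # Reactive step: a failure event triggers the next alternative plan.
        return "failed"


# Simulate a robot whose "pick" action fails, forcing the decision level to react.
functional = FunctionalLevel(failing={"pick"})
decision = DecisionLevel(ExecutionControl(functional))
status = decision.achieve("bottle")
print(status, functional.log)
```

In this sketch, deliberation and reaction are balanced in the simplest possible way: the decision level tries its plans in order and switches when the level below reports a failure. A real system would need a richer policy, for instance replanning from the current state rather than from a fixed list.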

Of course, there is much more involved when diving deeper into the philosophical aspects related to developing human-robot interaction, as well as determining the robot capabilities involved for humans and robots to work collaboratively.

Having a clear understanding of human interactions has become a foundation for creating robot controls that address key design elements needed for safe and effective human-robot interaction, and that are capable of meeting new challenges as robots become more advanced, including the ability to learn from increased interaction with humans.